Optimal Renormalization Group Transformation from Information Theory


P. M. Lenggenhager, D. E. Gökmen, Z. Ringel, S. D. Huber, and M. Koch-Janusz

Phys. Rev. X 10, 011037 (2020) – Published 14 Feb 2020

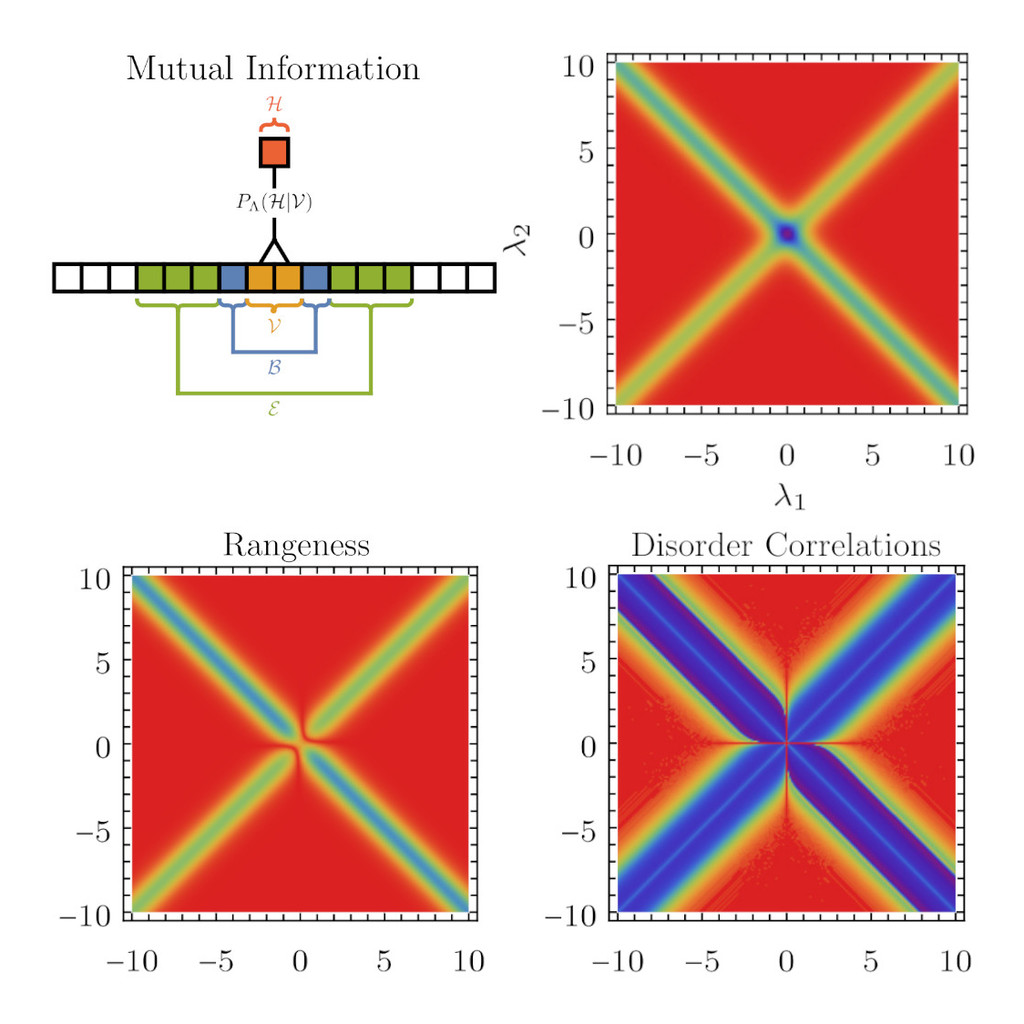

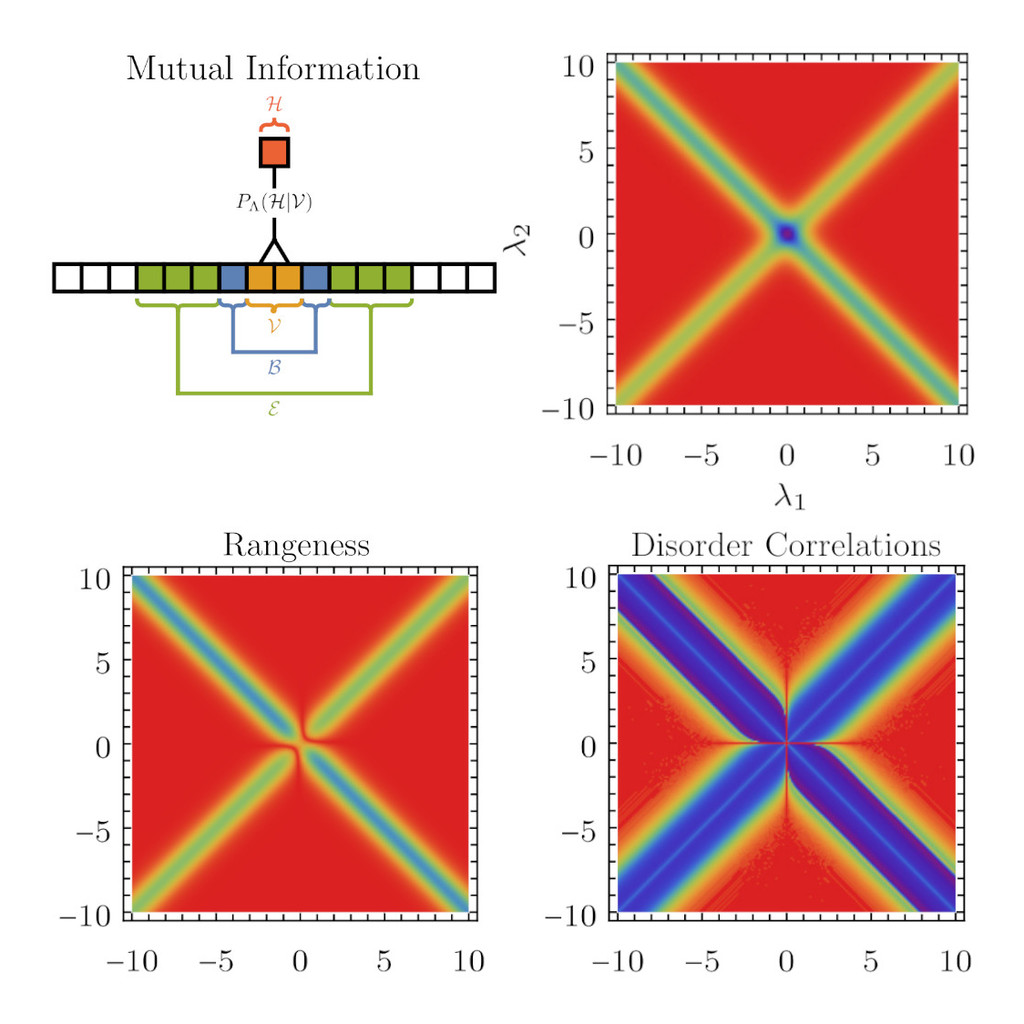

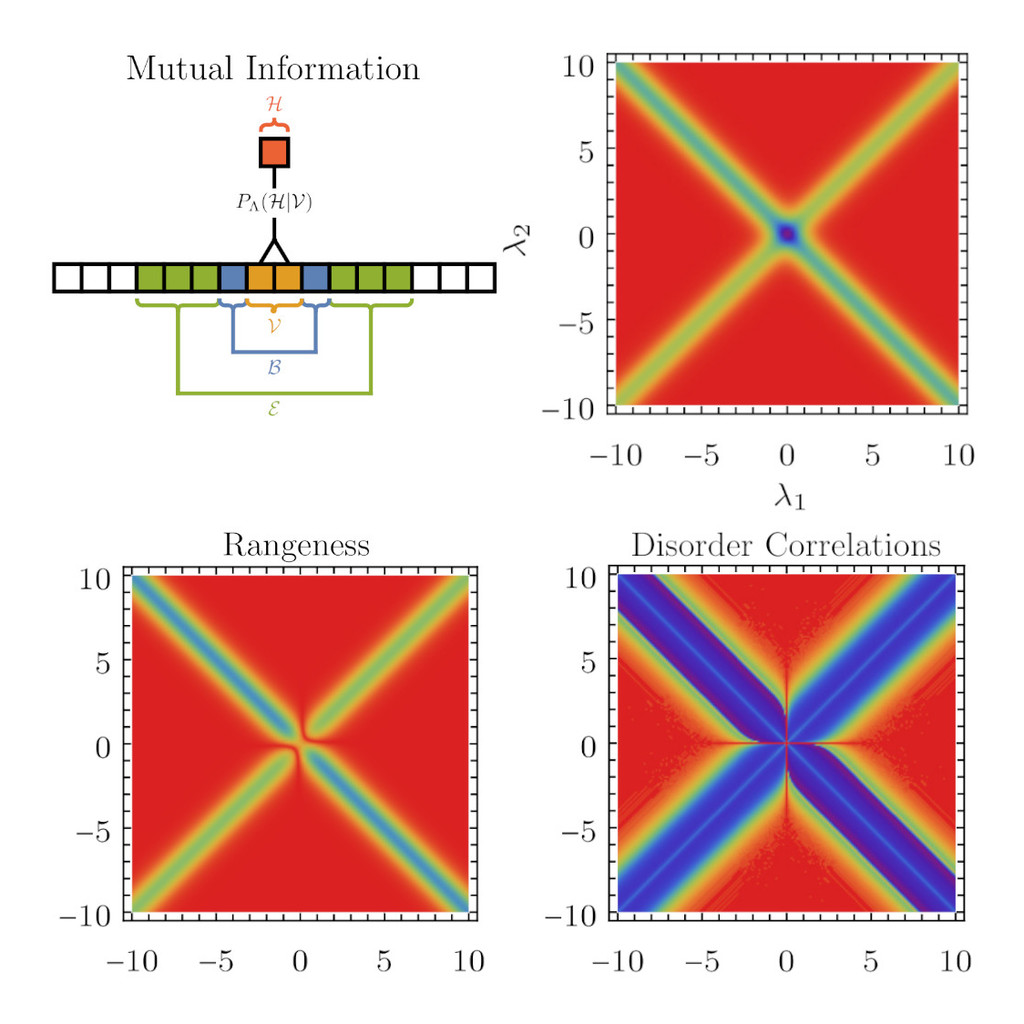

We consider a recently introduced real-space renormalization group (RG) algorithm that is based on maximizing real-space mutual information (RSMI). Using general proofs and detailed studies of arbitrary coarse grainings of the Ising chain, we argue that maximizing RSMI minimizes the range of interactions in the coarse-grained system and, for disordered systems, the correlations in its disorder distribution.
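For reference, the quantity being maximized is the mutual information between a coarse-grained variable $\mathcal{H}$, obtained from a block of visible degrees of freedom $\mathcal{V}$, and the block's environment $\mathcal{E}$. The notation below ($\Lambda$ for the parameters of the coarse-graining rule $P_\Lambda(h \mid v)$) follows the original RSMI proposal as we understand it and should be read as an assumption rather than this paper's exact conventions:

```latex
I_\Lambda(\mathcal{H}:\mathcal{E})
  = \sum_{h,e} P_\Lambda(e,h)\,
    \log\frac{P_\Lambda(e,h)}{P_\Lambda(h)\,P(e)},
\qquad
P_\Lambda(e,h) = \sum_{v} P_\Lambda(h \mid v)\,P(v,e),
\qquad
P_\Lambda(h) = \sum_{e} P_\Lambda(e,h).
% Since H depends on E only through V (Markov chain E -> V -> H),
% the data-processing inequality bounds what any coarse graining can retain:
I_\Lambda(\mathcal{H}:\mathcal{E}) \le I(\mathcal{V}:\mathcal{E}).
```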

Abstract

Recently, a novel real-space renormalization group (RG) algorithm was introduced. By maximizing an information-theoretic quantity, the real-space mutual information, the algorithm identifies the relevant low-energy degrees of freedom. Motivated by this insight, we investigate the information-theoretic properties of coarse-graining procedures for both translationally invariant and disordered systems. We prove that a perfect real-space mutual information coarse graining does not increase the range of interactions in the renormalized Hamiltonian, and, for disordered systems, it suppresses the generation of correlations in the renormalized disorder distribution, being in this sense optimal. We empirically verify the decay of these measures of complexity as a function of the information retained by the RG, using arbitrary coarse grainings of the clean and random Ising chains as examples. The results establish a direct and quantifiable connection between properties of RG viewed as a compression scheme and those of physical objects, i.e., Hamiltonians and disorder distributions. We also study the effect of constraints on the number and type of coarse-grained degrees of freedom on a generic RG procedure.
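The idea of scoring "arbitrary coarse grainings of the clean Ising chain" by the information they retain can be illustrated with a small exact calculation. The sketch below is not the authors' RSMI algorithm, which optimizes a parametrized coarse-graining rule. Instead it brute-forces every deterministic binary coarse graining of a two-spin block. It rests on several assumptions chosen purely for illustration: an open, zero-field chain of six spins with coupling `K` (in units of the temperature), the block $\mathcal{V} = (s_2, s_3)$, a one-site buffer on each side, and the environment $\mathcal{E} = (s_0, s_5)$. All function names are hypothetical.

```python
import itertools
import math
from collections import defaultdict


def joint_block_env(K, n=6, block=(2, 3), env=(0, 5)):
    """Exact joint distribution P(v, e) for an open, zero-field Ising chain.

    Spins take values +1/-1 and the Boltzmann weight is exp(K * sum_i s_i s_{i+1}).
    Sites in neither `block` nor `env` act as a buffer and are summed out.
    """
    weights = defaultdict(float)
    for spins in itertools.product((1, -1), repeat=n):
        bond_sum = sum(spins[i] * spins[i + 1] for i in range(n - 1))
        v = tuple(spins[i] for i in block)
        e = tuple(spins[i] for i in env)
        weights[(v, e)] += math.exp(K * bond_sum)
    Z = sum(weights.values())  # partition function
    return {key: w / Z for key, w in weights.items()}


def rsmi(p_ve, coarse_grain):
    """Mutual information I(H:E) in nats for a deterministic map h = coarse_grain(v)."""
    p_he = defaultdict(float)
    for (v, e), p in p_ve.items():
        p_he[(coarse_grain(v), e)] += p
    p_h, p_e = defaultdict(float), defaultdict(float)
    for (h, e), p in p_he.items():
        p_h[h] += p
        p_e[e] += p
    return sum(p * math.log(p / (p_h[h] * p_e[e]))
               for (h, e), p in p_he.items() if p > 0)


def best_binary_coarse_graining(p_ve):
    """Brute-force search over all maps from block configurations to h in {0, 1}.

    Returns (largest I(H:E) found, lookup table realizing it).
    """
    configs = sorted({v for v, _ in p_ve})
    best = (-1.0, None)
    for outputs in itertools.product((0, 1), repeat=len(configs)):
        table = dict(zip(configs, outputs))
        info = rsmi(p_ve, table.__getitem__)
        if info > best[0]:
            best = (info, table)
    return best


if __name__ == "__main__":
    p_ve = joint_block_env(K=0.5)
    decimation = rsmi(p_ve, lambda v: v[0])  # keep only the first block spin
    full_block = rsmi(p_ve, lambda v: v)     # no compression: I(V:E)
    best_info, best_table = best_binary_coarse_graining(p_ve)
    print(f"decimation: {decimation:.6f}  best binary: {best_info:.6f}  "
          f"upper bound I(V:E): {full_block:.6f}")
```

Because decimation (keeping a single block spin) is one of the enumerated maps, the best binary coarse graining can never retain less information than it, and by the data-processing inequality no map can exceed $I(\mathcal{V}:\mathcal{E})$. Brute force is only feasible for tiny blocks (there are $2^{2^{|\mathcal{V}|}}$ binary maps), which is one reason a practical RSMI procedure optimizes a parametrized rule instead.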